Notes on Deep Reinforcement Learning

by 장승환

In this page I summarize in a succinct and straighforward fashion what I learn from Deep Reinforcement Learning course by Sergey Levine, along with my own thoughts and related resources. I will update this page frequently, like every week, until it’s complete.

Acronyms

- RL: Reinforcement Learning

- DRL Deep Reinfocement Learning

(8/23) Introduction to Reinforcement Learning

Markov chain

$\mathscr{M} = (\mathscr{S}, \mathscr{T})$

- $\mathscr{S}$ (state space) / $s \in \mathscr{S}$ (state)

- $\mathscr{T} (“= p”): \mathscr{S} \times \mathscr{S} \rightarrow [0,1]$ (transition operator, linear)

If we set $v_t = (p[S_t = i])_{i \in \mathscr{S}} \in [0,1]^{\vert \mathscr{S}\vert}$, then $v_{t+1} = \mathscr{T} v_t$.

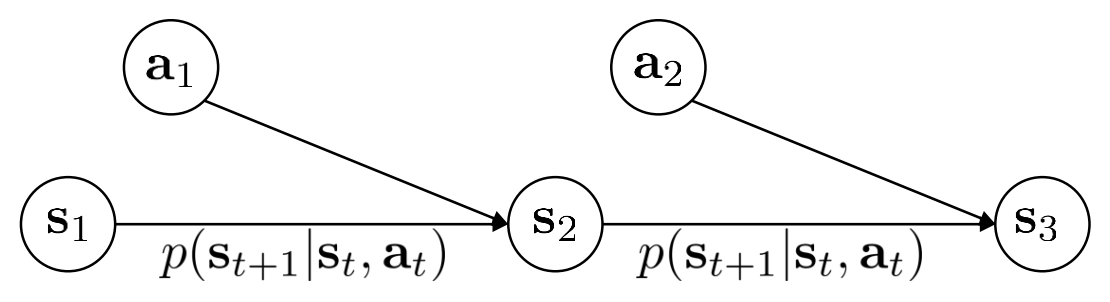

Markov Decision Process

Extension of MC to the decision making setting (popularized by Bellman in 1950’s)

$\mathscr{M} = (\mathscr{S}, \mathscr{A}, \mathscr{T}, r)$

- $\mathscr{S}$ (state space) / $s \in \mathscr{S}$ (state)

- $\mathscr{A}$ (action space) / $a \in \mathscr{A}$ (action)

- $\mathscr{T} : \mathscr{S} \times \mathscr{A} \times \mathscr{S} \rightarrow [0,1]$ (transition operator, tensor!)

If we set $v_t = (p[S_t = j])_{j \in \mathscr{S}} \in [0,1]^{\vert \mathscr{S}\vert}$ and $v_t = (p[S_t = k])_{k \in \mathscr{S}} \in [0,1]^{\vert \mathscr{S}\vert}$, then

To be added..

Subscribe via RSS